DYRGZN-05 Artificial Intelligence Experimental Device

Release time: 2024-07-03 16:00

Built on NVIDIA AI computing hardware, the system core is a small but powerful computer that can run multiple neural networks in parallel for applications such as object detection, segmentation, and speech processing. It is equipped with a quad-core Cortex-A57 processor, a 128-core Maxwell GPU, and 4GB of LPDDR memory, delivers 472 GFLOPS of computing power, and supports popular AI frameworks and algorithms such as TensorFlow, PyTorch, Caffe/Caffe2, Keras, and MXNet.
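The 472 GFLOPS figure can be sanity-checked with simple arithmetic. The sketch below assumes a GPU clock of 921.6 MHz, one fused multiply-add (2 floating-point operations) per core per cycle, and a doubled rate for half precision (FP16). None of these values appear on this page, so treat them as assumptions rather than vendor data.

```python
# Rough peak-throughput estimate for the 128-core Maxwell GPU described above.
# ASSUMPTIONS (not stated in this specification): 921.6 MHz GPU clock,
# 2 FLOPs per core per cycle (one fused multiply-add), FP16 at twice the FP32 rate.

CUDA_CORES = 128             # from the specification
GPU_CLOCK_HZ = 921.6e6       # assumed
FLOPS_PER_CORE_CYCLE = 2     # assumed
FP16_RATE_MULTIPLIER = 2     # assumed


def peak_gflops(cores, clock_hz, flops_per_cycle, multiplier=1):
    """Theoretical peak in GFLOPS: cores x clock x FLOPs per cycle x multiplier."""
    return cores * clock_hz * flops_per_cycle * multiplier / 1e9


fp32_gflops = peak_gflops(CUDA_CORES, GPU_CLOCK_HZ, FLOPS_PER_CORE_CYCLE)
fp16_gflops = peak_gflops(
    CUDA_CORES, GPU_CLOCK_HZ, FLOPS_PER_CORE_CYCLE, FP16_RATE_MULTIPLIER
)

print(f"FP32 peak: {fp32_gflops:.1f} GFLOPS")
print(f"FP16 peak: {fp16_gflops:.1f} GFLOPS")
```

Under these assumptions the half-precision peak rounds to the quoted 472 GFLOPS. Real workloads will typically achieve less than the theoretical peak.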

System framework and AI framework

1. The system comes with the Ubuntu 18.04 operating system pre-installed, and all environment, code, and library files are installed and ready to use.

Ubuntu 18.04 LTS is highly efficient in cloud computing, especially for storage-intensive and compute-intensive tasks such as machine learning. As a long-term support release, it receives up to five years of official technical support from Canonical. Ubuntu 18.04 LTS also ships with Linux kernel 4.15, which contains fixes for the Spectre and Meltdown vulnerabilities.

2. Provides detailed open-source Python example programs

According to the latest TIOBE ranking, Python has surpassed C# and has become one of the top 4 most popular languages in the world, along with Java, C, and C++. In China, its Baidu index search volume has surpassed that of Java and C++, and it is on track to become the most popular development language in China.

Python is widely used in back-end development, game development, website development, scientific computing, big data analysis, cloud computing, graphics development, and other fields. It holds a leading position in software quality control, development efficiency, portability, component integration, and library support. Python is simple, easy to learn, free, open source, portable, extensible, embeddable, and object-oriented; its object orientation is even more thorough than that of Java and C#.NET.

3. JupyterLab Programming

JupyterLab is a web-based interactive development environment for Jupyter notebooks, code, and data. Its user interface can be flexibly configured and arranged to support a wide range of workflows in data science, scientific computing, and machine learning. JupyterLab is extensible and modular: plug-ins can be written to add new components and to integrate with existing ones.

4. Multiple AI frameworks

OpenCV computer vision library, TensorFlow AI framework, PyTorch AI framework, etc.

1. Basic technical parameter requirements:

1. Input power: AC 220V±10%, 50 Hz;

2. Output power: DC: +5V/4A, +12V/4A, including instantaneous short circuit protection and overcurrent protection;

3. Working environment: temperature -10~+40 degrees Celsius Relative humidity <85% (25 degrees Celsius) Altitude <4000m;

4. Device capacity: <0.5 KVA;

5. Weight: about 5 KG;

6. Dimensions: ≥610*440*240mm;

7. Modularity: The experimental project is modularized to facilitate later upgrades and modifications;

8. The experimental box contains internal storage space that can properly hold modules and accessories. It opens with a press-to-pop-up mechanism;

9. The module PCB thickness is not less than 2mm, the panel is silk-screened with white characters on a black background, and the experimental module devices are all installed on the front of the experimental box, which makes them easy for students to identify and understand and simplifies later maintenance;

10. Experimental box: The outer box is made of aluminum-wood alloy material, and nylon protective pads are installed around the box, which is strong and beautiful, safe and environmentally friendly;

2. Hardware module configuration requirements:

1. AI core system

1) AI CPU core: CPU is 64-bit, not less than 4 cores;

2) AI GPU core: The number of GPU cores is not less than 100;

3) AI core expansion: has at least 4 USB3.0 interfaces, supports HDMI and DP video interfaces, a single PCIE with an M.2 interface, and is equipped with a cooling fan;

4) Main control operating system: Ubuntu 18.04 LTS+ROS_Melodic;

5) Development environment (IDE): JupyterLab;

6) Virtual environment: Anaconda 4.5.4;

7) Wireless network card: supports 2.4GHz/5GHz, supports Bluetooth 4.2;

8) Supports a series of popular AI frameworks and algorithms, such as TensorFlow, Caffe/Caffe2, Keras, PyTorch, MXNet, etc.;

9) The system is installed with the OpenCV computer vision library, the TensorFlow AI framework, and the PyTorch AI framework;

10) Can complete algorithm experiments such as speech emotion recognition and AI vision to realize garbage sorting;

2. Robot kinematics and ROS robot system

1) Material: anodized aluminum alloy;

2) Servo solution: 15Kg*5+6Kg*1 intelligent serial bus servo;

3) Robotic arm degrees of freedom: 5 degrees of freedom + gripper, 200g payload, arm span 350mm;

4) Camera: USB interface, 300,000 pixels, 110-degree wide-angle camera, 480P resolution (600*480);

5) Interface: 6 bus servo interfaces, PWM servo interface, I2C interface; in addition to the AI core board, it also supports STM32 and Raspberry Pi;

6) OLED: Displays basic information such as CPU usage, memory usage, and IP address;

7) Supports 3 control methods: mobile phone APP (IOS/Android), PC host computer, and PS2 handle (PC end);

8) PC host computer supports FPV first-person perspective control and displays 3D simulation models. It can also simulate the robot arm in real time, displaying its movements or controlling it through 3D graphics;

9) Supports ROS robot operating system;

10) 1 RGB light;

11) Buttons: K1+K2 key+RESET key;

12) T-type power supply interface;

13) Micro USB interface;

14) PS2 handle receiver seat;

3. AI hearing system

1) Based on the USB interface design, it adopts SSS1629 audio chip, which is driver-free and compatible with multiple systems;

2) Two high-quality MEMS silicon microphones on board can record left and right channels with better sound quality;

3) Onboard standard 3.5mm headphone interface, which can play music through external headphones;

4) Onboard dual-channel speaker interface, which can directly drive the speaker;

5) Onboard speaker volume adjustment button, which is convenient for adjusting the appropriate volume;

4. Speech emotion recognition

1) Virtual environment: Anaconda 4.5.4;

2) Algorithm development: CUDA, cuDNN, PyTorch, TensorBoard;

3) Deep model: Mobilenet_v2;

4) Features: a short-time Fourier transform (STFT) is applied to the speech signal to convert it into a time-frequency spectrogram;

5) A shortcut (residual connection) mechanism is introduced to mitigate the vanishing gradients caused by increasing network depth;

6) The output shows each emotion with its probability, along with a graphical display;

7) Emotion types: no less than 5 categories;
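The STFT feature extraction in item 4 can be illustrated with a minimal sketch. It uses only the Python standard library and a synthetic 1 kHz tone in place of real speech. The frame length, hop size, Hann window, and sample rate are illustrative assumptions, not the parameters of the supplied model, and a practical pipeline would more likely use an optimized routine such as `torch.stft`.

```python
import cmath
import math

FRAME_LEN = 64       # samples per analysis frame (assumed)
HOP = 32             # samples between frame starts (assumed)
SAMPLE_RATE = 8000   # Hz (assumed)
TONE_HZ = 1000       # synthetic test tone standing in for speech


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def stft_magnitude(signal, frame_len=FRAME_LEN, hop=HOP):
    """Return one magnitude spectrum (bins 0..frame_len//2) per frame."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + i] * window[i] for i in range(frame_len)]
        spectrum = []
        for k in range(frame_len // 2 + 1):
            # Naive DFT of the windowed frame at frequency bin k.
            acc = sum(
                chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


signal = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(512)]
spec = stft_magnitude(signal)

# Locate the strongest frequency bin in the first frame.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_LEN
print(len(spec), "frames x", len(spec[0]), "bins; strongest bin at", peak_hz, "Hz")
```

Each row of `spec` is one time slice and each column one frequency bin, so the nested list is the time-frequency graph that a network such as MobileNetV2 could then consume as an image.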

5. Basic GPIO and sensor experiment module

1) Bi-color LED: 5mm red and green bi-color LED indicator light, with current limiting resistor;

2) Relay: 5V power supply, 1-way optocoupler isolation, support high/low level trigger;

3) Touch switch button: ordinary button switch, automatic reset;

4) U-type photoelectric sensor: use imported slot-type optocoupler sensor, slot width 10.5mm, with output status indicator light, digital switch output;

5) Analog-to-digital conversion: single power supply, low-power 8-bit CMOS A/D and D/A conversion chip with 4 analog input channels, one analog output channel, and one I2C bus interface;

6) PS2 joystick: the module has two analog outputs and one digital output interface;

7) Potentiometer: 20K potentiometer;

8) Analog Hall sensor: input is magnetic induction intensity, with output status indicator, digital switch output and AO voltage output;

9) Photosensitive sensor: based on internal photoelectric effect, with output status indicator, digital switch output and AO voltage output;

10) Flame alarm: can detect flames or light sources with wavelengths in the range of 760nm-1100nm, the lighter test flame distance is 80cm, the detection angle is 60 degrees, and the flame detection response time is <1s;

11) Gas sensor: Metal oxide semiconductor (MOS) type gas sensor, can detect LPG, smoke, alcohol, propane, hydrogen, methane, and carbon monoxide, with concentrations from 200-10000PPM;

12) Touch switch: It uses a capacitive touch sensor and can be installed on the surface of non-metallic objects;

13) Ultrasonic sensor distance detection: The sensing angle is not more than 15 degrees, the detection distance is 2-450cm, and the accuracy can reach 0.3cm;

14) Rotary encoder: Both phases A and B output square waves. When rotating clockwise, phase A leads phase B by 90 degrees, and when rotating counterclockwise, phase B leads phase A by 90 degrees;

15) Infrared obstacle avoidance sensor: It is applicable to the reflection distance of 1mm~25mm;

16) Air pressure sensor: Pressure range: 300~1100hPa, resolution is 0.03hPa;

17) Gyroscope acceleration sensor: The chip has a built-in 16-bit AD converter, 16-bit data output, gyroscope range: ±250/±500/±1000/±2000 °/s, acceleration range: ±2/±4/±8/±16 g;

18) Tracking sensor: Detection distance 1-8mm, focal distance is 2.5mm;

19) DC motor fan module: working current 0.35-0.4A, motor shaft length 9mm;

20) Stepper motor driver module: step angle: 5.625° x 1/64, reduction ratio: 1/64;

21) Complete the WeChat applet control experiment based on the MQTT protocol, and provide the WeChat applet source code;
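Several of the sensor figures above reduce to short calculations. The sketch below shows how raw readings might be converted for the ultrasonic sensor, the 8-bit A/D chip, the rotary encoder, the gyroscope, and the stepper motor. The speed of sound, the 5 V reference voltage, and all function names are illustrative assumptions, not part of the supplied example code.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # assumed: air at roughly 20 degrees Celsius


def ultrasonic_distance_cm(echo_time_s):
    """Convert a round-trip echo time into a one-way distance in cm."""
    return echo_time_s * SPEED_OF_SOUND_CM_PER_S / 2


def adc_to_volts(raw, vref=5.0, bits=8):
    """Convert an 8-bit A/D reading (0..255) to volts; vref is assumed."""
    return raw * vref / (2 ** bits - 1)


def encoder_direction_on_a_rising_edge(b_level):
    """Sample phase B when phase A rises.

    If A leads B (clockwise), B is still low at A's rising edge;
    if B leads A (counterclockwise), B is already high.
    """
    return "clockwise" if b_level == 0 else "counterclockwise"


def gyro_dps(raw, full_scale_dps=250):
    """Convert a signed 16-bit gyroscope reading to degrees per second."""
    return raw * full_scale_dps / 32768


def stepper_steps_per_rev(step_angle_deg=5.625, reduction=64):
    """Steps per output-shaft revolution: 360 / (5.625 / 64)."""
    return round(360 / (step_angle_deg / reduction))


print(ultrasonic_distance_cm(0.001), "cm for a 1 ms echo")
print(adc_to_volts(128), "V for raw reading 128")
print(encoder_direction_on_a_rising_edge(0))
print(gyro_dps(16384), "deg/s at the ±250 range")
print(stepper_steps_per_rev(), "steps per revolution")
```

The stepper result follows directly from the listed step angle and reduction ratio; the other conversions depend on the assumed constants and should be checked against the datasheets of the actual parts.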

6. Supporting resources:

1) Display screen: 10-inch display screen, HDMI interface, resolution 1080P. The display screen is installed at an angle greater than 5°;

2) Keyboard and mouse: dry battery powered, Bluetooth connection;

3) Model trash can: size: ≥90*80*103mm;

4) Classification building blocks: no less than 4;

5) Supporting experiment guide: no less than 500 pages;

6) Provide code: no less than 50;

7) Provide artificial intelligence professional courseware and teaching materials;

7. Configure the Zhilan Cloud Testing System (1 set is configured in the laboratory, and a demonstration video must be provided)

(I) Requirements for the basic test part:

1) It can measure various analog components such as resistors, capacitors, diodes, triodes, thyristors, inductors, MOS tubes, etc., and can display the corresponding resistance, capacitance and inductance values of the devices, automatically identify pins, automatically perform range tests, and determine device types, pin polarity and magnification, etc.;

2) It can measure commonly used 74 series TTL level chips and CD series CMOS level chips, and supports more than 1,500 ICs;

3) For integrated devices, there is no need to enter the chip model, and it can automatically complete functions such as chip identification, testing and display results;

4) It can measure LED display devices, including LED light-emitting diodes, LED digital tubes and LED dot matrix blocks. When testing LED display devices, there is no need to consider the order of device pin arrangement, common anode or common cathode, etc., just insert all pins into the test socket. After pressing the test button, all intact segments and pixels of the device under test are lit up, and the presence of bad pixels, core brightness and brightness uniformity can be visually judged.

5) It can measure commonly used amplifier chips, comparator chips, time base circuit chips, driver chips, and photoelectric coupling chips.

6) The operation is simple, without complex operation and setting. You only need to place the device under test according to the instructions, one-button operation, and the test results will be displayed after 1~2 seconds.

7) Wireless mobile phone APP controls the output voltage: DC 1-22V continuously adjustable;

8) Voltage display: resolution 0.05V, range 0-22V, accuracy ±0.1V;

9) Current display: resolution 0.005A, range 0-4.5A, accuracy ±0.05A, conversion efficiency above 90%;

10) Supports two voltage setting methods: manual button and smart phone APP wireless control;

11) APP end function: real-time monitoring and viewing of output voltage, output current, output power, and input voltage. Wireless control to turn on/off the power, wireless voltage setting and other functions;

(II) Requirements for the cloud test system:

1) The cloud test system is connected to the MCU serial port, and the MCU operation status can be viewed remotely, log filtering can be performed, and special reminders can be given for warnings and error messages;

2) The WeChat applet can be used to view the measured values, which can be displayed on the mobile phone and computer simultaneously;

3) MQTT connects to the server, and the measured values can be viewed at any time with the mobile phone;

4) Networking function: The mobile phone and the device communicate through the MQTT server, and the measured values can be viewed anytime and anywhere without distance restrictions;

5) APP function: measurement history data list, historical measurement values can be viewed by time;

6) APP function: monitoring upper and lower limits, and upper and lower limit values can be set;

7) APP function: measurement value sharing; tap a single entry in the data list to copy it to the clipboard, then paste it into another chat tool to share it with others;

8) The PC and mobile phone can open the WeChat applet to monitor the measurement at the same time;

9) Voice broadcast: Press the voice broadcast button of the tester, and the WeChat applet client will play the current measurement;

10) Built-in rechargeable battery capacity 1200mAh;

11) Charging current <300mA;

12) Equipment use time: >15 hours (20-30℃);

13) LAN uses UDP communication, which can realize simultaneous monitoring of multiple mobile phones/computers;

14) The measured data can be stored in the cloud for analysis;

15) Unmanned monitoring of upper and lower limit abnormal alarms;

16) The measured value can be converted into other unit values such as PT100, 4-20mA, 0-5V, etc.;

17) Voice broadcast of current measured value and upper and lower limit alarms;

18) Measurement parameter indicators:

a. DC voltage: range ±300V, accuracy ±0.5%

b. AC voltage: range 300V, accuracy ±2%

c. DC current: range ±250mA, accuracy ±1%

d. AC current: range 250mA, accuracy ±2%

e. DC current: range 2.5A, accuracy ±1%

f. AC current: range 2.5A, accuracy ±2%

g. Capacitance: range 10uF, accuracy ±3%

h. Resistance: range 5MΩ, accuracy ±0.5%
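The unit conversion in item 16 and the upper and lower limit alarms in items 6 and 15 can be sketched as follows. This is one possible reading of those requirements: a 4-20mA loop current is mapped linearly onto a user-configured engineering span and then checked against limits. The function names, span, and limit values are hypothetical.

```python
def current_to_engineering(current_ma, span_low, span_high):
    """Linearly map a 4-20 mA loop current onto [span_low, span_high]."""
    fraction = (current_ma - 4.0) / 16.0
    return span_low + fraction * (span_high - span_low)


def limit_alarm(value, lower, upper):
    """Return an alarm message, or None when the value is within limits."""
    if value < lower:
        return "below lower limit"
    if value > upper:
        return "above upper limit"
    return None


# Hypothetical example: 12 mA on a 0..100 span, alarm limits 10 and 40.
reading = current_to_engineering(12.0, 0.0, 100.0)
alarm = limit_alarm(reading, lower=10.0, upper=40.0)
print(reading, alarm)
```

Returning `None` for the normal case keeps the check easy to use in an unattended monitoring loop, where only a non-empty message would need to trigger a notification or voice broadcast.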

8. Configure the laboratory management system (demonstration video required)

1) Teachers/students can use the user management function to modify and view their own information; they can use the experimental course and equipment management function to inquire about experimental courses and related information and make reservations for laboratories; they can use the equipment borrowing management function to inquire about equipment borrowing status;

2) Administrator workflow: The administrator is a built-in user of the system. Enter the user name and password, log in to the system after system verification, and enter the homepage;

3) The administrator can perform user management, laboratory management, equipment borrowing management, equipment maintenance management, experimental course and equipment management, notification management and system reports;

4) The basic hierarchical structure of the system must include: background data layer, intermediate business layer, database access interface, comprehensive front-end service, TCP/IP protocol communication interface, and front-end application layer;

5) The intelligent management system must include the following seven functions, namely notification management, user management, laboratory management, laboratory course and equipment management, equipment borrowing management, equipment maintenance management, and report statistics functions;

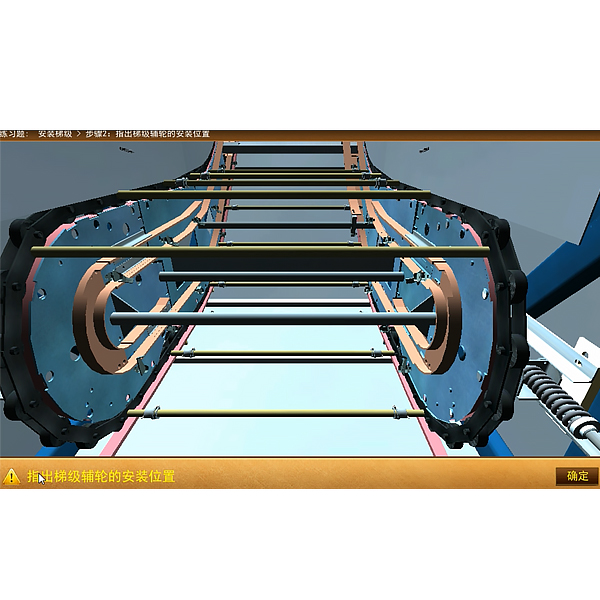

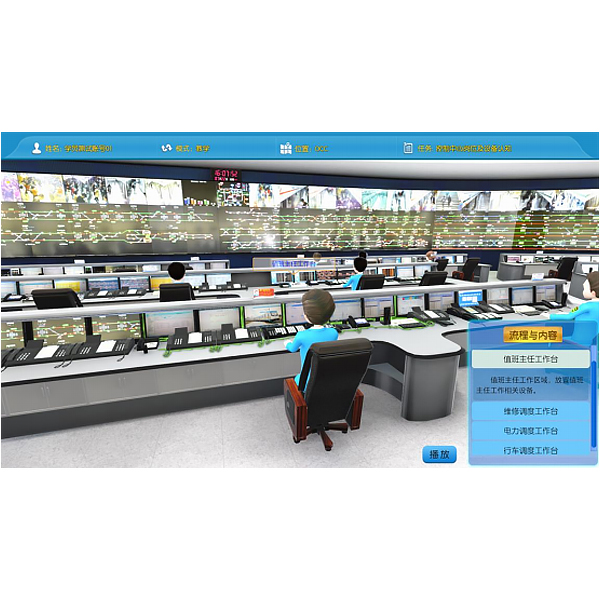

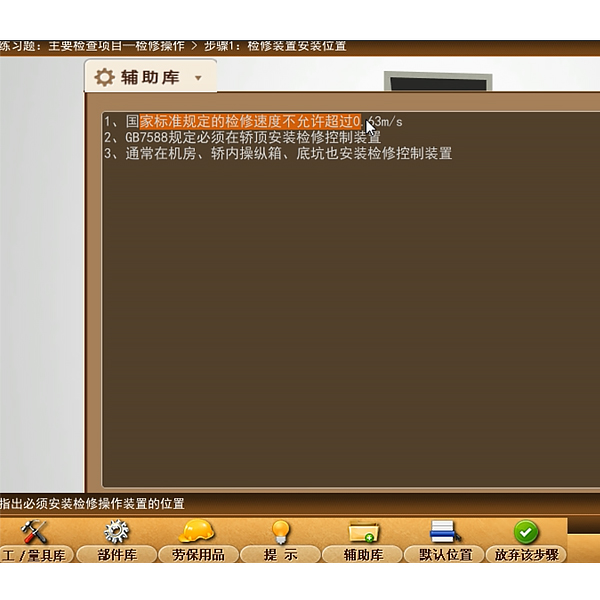

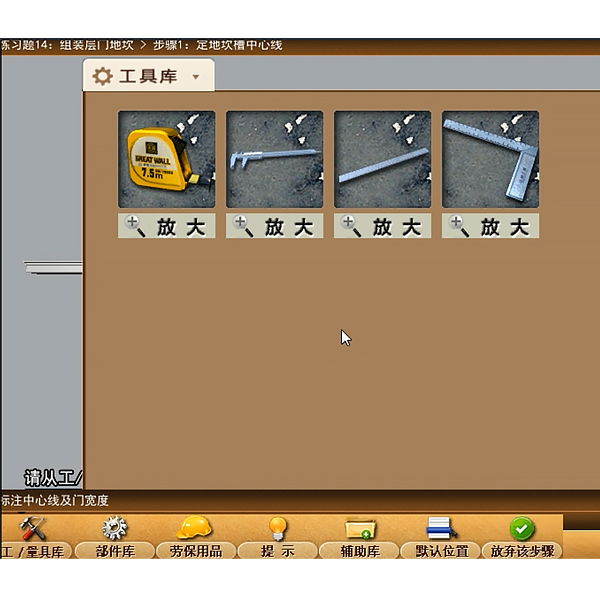

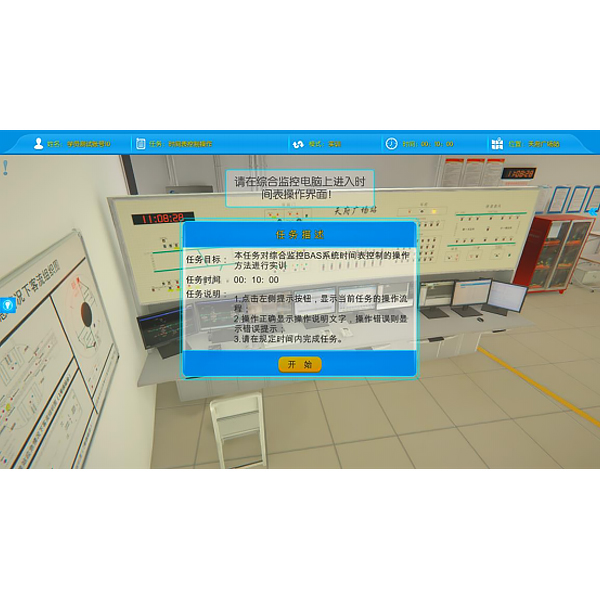

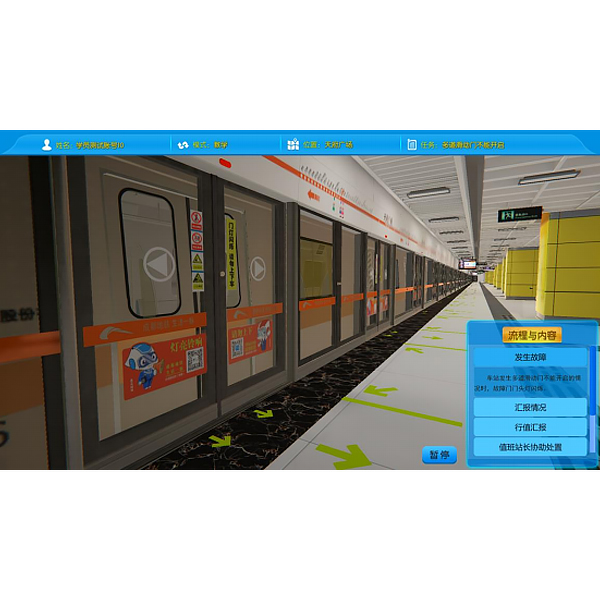

9. Single-chip microcomputer and PLC programmable design and control virtual simulation software (copyright certificate and demonstration video are required):

1) This software is developed with Unity3D, with built-in buttons for experimental steps, experimental instructions, circuit diagrams, component lists, connection lines, power on, scene reset, return, and others. Once the connections and code are correct, the 3D machine model can be operated with the start/stop, forward motion, and reverse motion buttons. When the lines are connected, the 3D machine model can be zoomed in/out and translated.

2) Relay control: Read the experimental instructions and enter the experiment. Following the circuit diagram, select relays, thermal relays, switches and other components in the component list and drag and drop them into the electrical cabinet. The limiter is placed on the 3D machine model. You can choose to close the cover. Some components can be renamed. Then click the connection line button to connect terminal to terminal. After the machine circuit is successfully connected, turn on the power and operate. If a component or line connection is wrong, an error box will pop up, and the scene can be reset at any time.

3) PLC control: The experiment is the same as relay control, with the addition of PLC control function. After the connection is completed, press the PLC coding button to enter the program writing interface, write two programs, forward and reverse, with a total of 12 ladder diagram symbols. After writing, select Submit to verify the program. After successful verification, turn on the power to operate. Error boxes will pop up for components, line connections, and code errors, and the scene can be reset at any time.

4) MCU control: The experiment is the same as relay control, with the addition of MCU control function. After the connection is completed, press the C coding button to enter the programming interface, enter the correct C language code, and submit for successful verification. Turn on the power to operate. Error boxes will pop up for components, line connections, and code errors, and the scene can be reset at any time.
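The forward and reverse programs mentioned for the PLC and MCU experiments typically follow a latching pattern with mutual interlock. The sketch below models one scan of such logic in Python. It is a hypothetical illustration of the general technique, not the ladder program or C code that the simulation software expects, and the names `motor_scan`, `forward`, and `reverse` are assumptions.

```python
def motor_scan(state, forward_btn, reverse_btn, stop_btn):
    """Evaluate one scan of a forward/reverse latch with mutual interlock.

    state holds the two contactor coils from the previous scan.
    Each coil seals itself in once energized, drops out on stop, and is
    blocked while the opposite coil or the opposite button is active.
    """
    forward = (
        (forward_btn or state["forward"])
        and not stop_btn
        and not state["reverse"]
        and not reverse_btn
    )
    reverse = (
        (reverse_btn or state["reverse"])
        and not stop_btn
        and not state["forward"]
        and not forward_btn
    )
    return {"forward": forward, "reverse": reverse}


state = {"forward": False, "reverse": False}
state = motor_scan(state, True, False, False)    # press forward
state = motor_scan(state, False, False, False)   # release: coil stays latched
latched = dict(state)
state = motor_scan(state, False, True, False)    # press reverse: forward drops
state = motor_scan(state, False, True, False)    # next scan: reverse picks up
reversed_state = dict(state)
state = motor_scan(state, False, False, True)    # stop releases everything
print(latched, reversed_state, state)
```

Because each coil is blocked by the other, the two outputs are never energized in the same scan, which is the property the interlock exists to guarantee.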

3. Experimental project requirements:

1. Experiment on controlling each servo separately

2. Experiment on servo memory action

3. Synchronous teaching of mechanical arm

4. No less than 8 fixed action groups of the mechanical arm

5. Customized learning action group of the mechanical arm

6. AI visual color recognition and grabbing

7. AI visual color recognition sorting and stacking

8. AI visual positioning

9. AI visual garbage sorting

10. OpenCV geometric transformation

11. OpenCV image processing and drawing text segments

12. OpenCV read, write and save images

13. OpenCV image beautification

14. OpenCV read, write and save videos

15. OpenCV painting function

16. OpenCV color detection

17. OpenCV face and eye detection

18. OpenCV pedestrian detection

19. OpenCV car detection

20. OpenCV license plate detection

21. OpenCV real-time position of the object

22. OpenCV camera gimbal object tracking

23. OpenCV camera gimbal face tracking

24. OpenCV motion detection and tracking

25. OpenCV face recognition

26. Image recognition practice based on convolutional neural network model

27. Deep learning model

28. Gesture recognition, expression recognition and facial feature recognition based on PyTorch

29. AI online speech synthesis experiment

30. AI speech dictation streaming experiment

31. Turing robot experiment

32. AI full-link human-computer interaction speech experiment

33. AI robot speech dialogue experiment

34. SnowBoy speech wake-up experiment

35. Speech emotion recognition

36. Internet of Things experiment based on MQTT protocol

37. Internet of Things experiment based on Buffa Cloud

38. Internet of Things experiment based on Alibaba Cloud

39. Internet of Things mobile phone experiment based on WeChat applet

40. Internet of Things smart light experiment

41. Internet of Things smart fan experiment

Provide no less than 20 GPIO control and sensor experiment modules
